How Brainly Avoids Workflow Bottlenecks With Automated Tracking

Brainly is the leading learning platform worldwide, with the most extensive Knowledge Base for all school subjects and grades. Each month over 350 million students, parents, and educators rely on Brainly as the proven platform to accelerate understanding and learning.

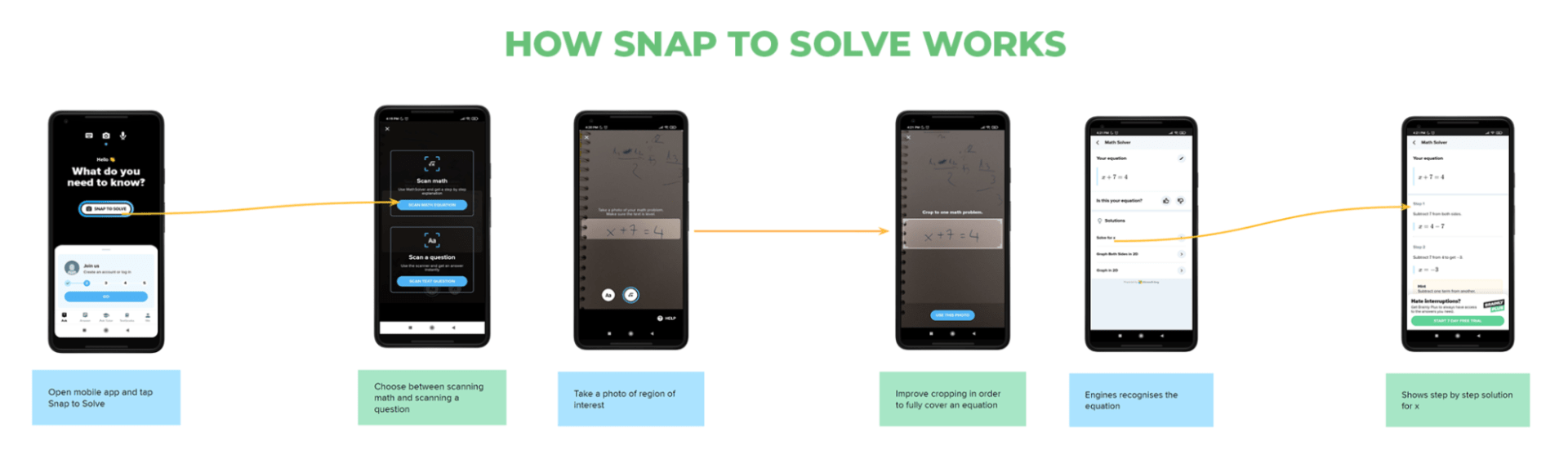

One of their core features and key entry points is Snap to Solve. It’s a machine learning-powered feature that lets users take and upload a photo. Snap to Solve then detects the question or problem in that photo and provides solutions.

This case study focuses on the Visual Search team that works on the Visual Content Extraction (VICE) system for the Snap to Solve product.

The challenge

The team uses Amazon SageMaker to run their computing workloads and serve their models.

They run training jobs in their pipelines to solve different problems connected to the same product. For instance, one job could be training the main models that detect objects in a scene; other jobs could be training auxiliary models for matching features, detecting edges, or cropping objects.

As the number of training runs on the team’s large compute architectures increased, they realized that their logs from Amazon SageMaker needed to be trackable and manageable, or they would cause bottlenecks in the workflow. “We are running our training jobs through SageMaker Pipelines, and to make it reproducible, we need to log each parameter when we launch the training job with SageMaker Pipeline,” says Mateusz Opala, Senior Machine Learning Engineer at Brainly.

Seamless integration with Amazon SageMaker for tracking at scale

The team tried SageMaker Experiments for experiment tracking, but they didn’t like its tracking UX or its Python client. They also needed a purpose-built tool that could scale regardless of the experiment volume.

Neptune checked these boxes.

Neptune’s UI and the front end work great, and you don’t feel that you have to ‘fight’ with it. So instead of ‘fighting’ the tool, the tool itself is helping.

The team integrated Neptune smoothly with their technology stack and their CD pipeline.

They developed a custom template to connect Neptune with Amazon SageMaker Pipelines using the `NEPTUNE_CUSTOM_RUN_ID` feature.

First, they set the environment variable `NEPTUNE_CUSTOM_RUN_ID` to a unique identifier. Then, whenever they launch a new training job (through a Python script) from their local machine or in AWS, the variable tells Neptune that all jobs started with the same `NEPTUNE_CUSTOM_RUN_ID` value should be treated as a single run.

This way, they can log and retrieve experiment metadata and usage metrics for the whole computational pipeline (for example, the data pre-processing and training jobs) in a single run. It helps the team organize their work efficiently and ensures they can easily reproduce their experiments from SageMaker Pipelines.
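The mechanism described above can be sketched roughly as follows. This is a minimal illustration, not Brainly's actual template: the helper names `make_custom_run_id` and `step_environment`, the `vice-training` prefix, and the `EPOCHS` setting are hypothetical. Only the `NEPTUNE_CUSTOM_RUN_ID` variable name comes from the text.

```python
import os
import uuid
from typing import Dict, Optional


def make_custom_run_id(prefix: str = "pipeline") -> str:
    """Return an identifier that is unique per pipeline execution (hypothetical helper)."""
    return f"{prefix}-{uuid.uuid4().hex}"


def step_environment(custom_run_id: str, extra: Optional[Dict[str, str]] = None) -> Dict[str, str]:
    """Build the environment for one pipeline step.

    Every step of the same pipeline execution receives the same
    NEPTUNE_CUSTOM_RUN_ID, so their metadata lands in a single Neptune run.
    """
    env = dict(extra or {})
    env["NEPTUNE_CUSTOM_RUN_ID"] = custom_run_id
    return env


# One identifier per pipeline execution, shared by all of its steps.
run_id = make_custom_run_id("vice-training")
preprocessing_env = step_environment(run_id)
training_env = step_environment(run_id, {"EPOCHS": "10"})

# For a job launched from a local machine, the variable can be exported
# in the current process before the training script initializes Neptune.
os.environ["NEPTUNE_CUSTOM_RUN_ID"] = run_id
```

In a SageMaker setup, dictionaries like these would presumably be handed to each step through the job's environment settings, and each job script would then initialize its Neptune run as usual. How Brainly wires this into their pipeline definition is not described in this case study.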

“By logging the most crucial parameters, we can go back in time and see how it worked in the past for us, and this, I would say, is precious knowledge. On the other hand, if we track the experiments manually, that will never be the case, as we would only know what we thought we saw,” says Hubert Bryłkowski, Senior Machine Learning Engineer at Brainly.

Anyone on the team or in the wider organization can then easily analyze whatever is tracked this way in Neptune’s intuitive UI.

As Gianmario Spacagna, the Director of AI at Brainly, says, “An important detail that we considered when we decided to choose Neptune is that we can invite everybody on Neptune, even non-technical people like product managers — there is no limitation on the users. This is great because, on AWS, you’d need to get an additional AWS account, and for other experiment tracking tools, you may need to acquire a per-user license.”

All paid plans in Neptune include an unlimited number of users. Together with collaboration features such as sharing UI views through persistent links, this enables much better visibility across the organization.

Debugging and optimizing computational resource consumption

The team often uses multi-GPU training to run distributed training jobs. Running large data processing jobs on distributed clusters is one of the most compute-intensive tasks for the team, so they wanted to optimize it.

One thing they tested was whether multithreading would be better than multiprocessing for their processing jobs. After a few trials and monitoring the jobs in Neptune, they could see which approach worked better.
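A comparison of this kind could be set up along the following lines. This is a generic sketch using Python's standard `concurrent.futures` module, not the team's actual benchmark: `cpu_bound_stub` is a hypothetical stand-in for a real processing step, and `time_pool` is an illustrative helper.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, Tuple


def cpu_bound_stub(n: int) -> int:
    """Hypothetical stand-in for a CPU-heavy processing step."""
    return sum(i * i for i in range(n))


def time_pool(
    executor_cls: Callable,
    work: Callable[[int], int],
    items: Sequence[int],
    max_workers: int = 4,
) -> Tuple[float, List[int]]:
    """Run `work` over `items` in the given executor type.

    Returns the elapsed wall-clock time in seconds and the results,
    so the same workload can be timed with threads and with processes.
    """
    start = time.perf_counter()
    with executor_cls(max_workers=max_workers) as pool:
        results = list(pool.map(work, items))
    return time.perf_counter() - start, results


# Time the workload with a thread pool.
thread_seconds, thread_results = time_pool(
    ThreadPoolExecutor, cpu_bound_stub, [50_000] * 8
)
```

The same helper can be called with `concurrent.futures.ProcessPoolExecutor` to time the multiprocessing variant; that call is best placed under an `if __name__ == "__main__":` guard so worker processes can be started safely on every platform. Timings from both variants could then be compared alongside the resource usage charts in the tracking tool.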

When they trained a model on multiple GPUs, they would lose a lot of time copying images from the CPU or running data augmentation on the CPU. So they decided to optimize their data augmentation jobs by decompressing JPEGs and moving from plain TensorFlow and Keras to NVIDIA DALI. This improved data processing speed, and they could observe the improvement with Neptune’s resource usage monitoring.

Neptune helped them monitor the processes through the UI and understand how their image data augmentation programs utilized resources. They could see which processes were using the resources adequately and which were not, for example, when choosing multiprocessing over multithreading for processing jobs.

Neptune’s monitoring features played a big role in maximizing the team’s GPU usage. “I would say Neptune makes it easier for us to get deeper insights into resource utilization than would have been provided by a Cloud vendor or wherever our jobs are running,” adds Hubert.

The results

- Implemented efficient and standardized tracking across all SageMaker training pipelines.

- Enhanced reproducibility of machine learning experiments.

- Optimized multi-GPU resource utilization and improved efficiency.

- Enabled easy access for non-technical stakeholders.

Thanks to Hubert Bryłkowski, Mateusz Opala, and Gianmario Spacagna for working with us to create this case study!